Xinying Hou

xyhou [at] umich [dot] edu

PhD Candidate

School of Information

University of Michigan

Ann Arbor

News

01/2026 Our paper on how K–12 teachers from three cultural contexts approach GenAI education has been accepted to CHI 2026!

11/2025 Excited to share that the AI literacy activities I created have been accepted into the Hour of AI Activity Catalog.

10/2025 Grateful to share that I’ve been selected as a UMSI Dean’s Fellowship awardee. Truly appreciate the support that made this possible!

10/2025 One paper on teaching prompting literacy to middle school students got accepted to AAAI 2026!

9/2025 One paper on applying multimodal AI to support programming and one tutorial workshop about CodeTailor got accepted to SIGCSE 2026. See you in St. Louis, Missouri!

8/2025 Just passed my dissertation proposal! That’s milestone 4 of 5 in my PhD journey!

7/2025 I am serving on the program committee of EAAI 2026, which is co-located with AAAI-26.

7/2025 I coordinated the 1st International Workshop on AI Literacy Education For All at AIED 2025. Thanks to all for coming and contributing!

4/2025 One full paper and one late-breaking paper have been accepted to AIED 2025!

Selected Publications

Check my Google Scholar profile for a full list of publications and see who's citing them!

* Equal Contribution

Underlined names indicate that I contributed in a mentoring capacity

Exploring Student Choice and the Use of Multimodal Generative AI in Programming Learning

The 57th ACM Technical Symposium on Computer Science Education

With recent developments, GenAI applications have begun supporting multiple modes of communication, known as multimodality. In this work, we explored how undergraduate programming novices choose and work with multimodal GenAI tools, and what criteria guide their choices. We selected a commercially available multimodal GenAI platform for interaction, as it supports multiple input and output modalities, including text, audio, image upload, and real-time screen-sharing. Through 16 think-aloud sessions that combined participant observation with follow-up semi-structured interviews, we investigated student modality choices for GenAI tools when completing programming problems and the underlying criteria for modality selections.

An LLM-Enhanced Multi-agent Architecture for Conversation-Based Assessment

International Conference on Artificial Intelligence in Education

Conversation-based assessments (CBA), which evaluate student knowledge through interactive dialogues with artificial agents on a given topic, can help address non-effortful formative test-taking and the lack of adaptability in traditional assessment. This work combines the evidence-centered design framework with LLM techniques to establish a multi-agent architecture for conversation-based assessment. It includes four LLM agents: two student-facing agents and two behind-the-scenes agents. All agents are monitored by a non-LLM agent (Watcher), which manages the assessment flow through updated instructions to agents and turn control.

Improving Student-AI Interaction Through Pedagogical Prompting: An Example in Computer Science Education

Under Revision

We first proposed pedagogical prompting, a theoretically-grounded new concept to elicit learning-oriented responses from LLMs. For a proof-of-concept learning intervention in a real educational setting, we selected early undergraduate CS education (CS1/CS2) as the example context. Based on instructor insights, we designed and developed a learning intervention as an interactive system with scenario-based instruction to train pedagogical prompting skills. Finally, we assessed its effectiveness with pre/post-tests in a user study of CS undergraduates.

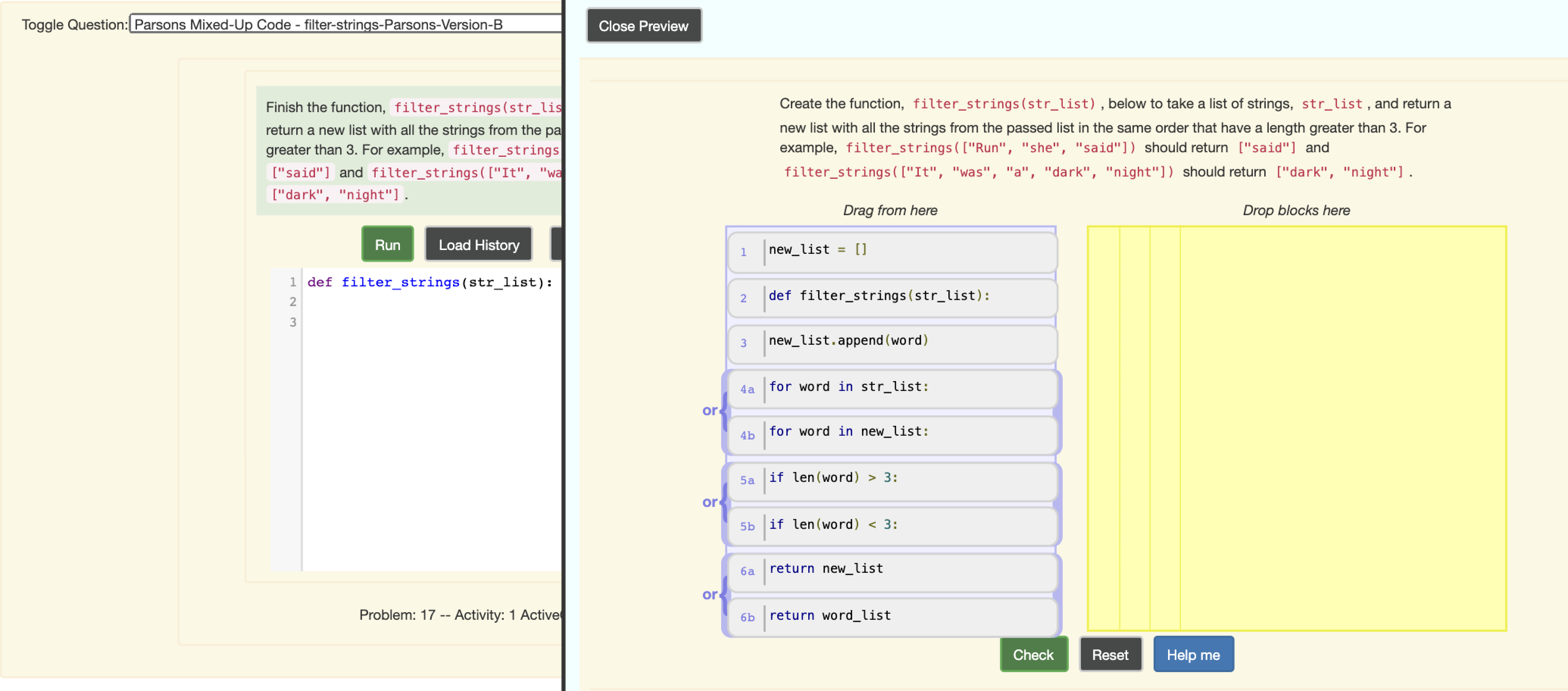

CodeTailor: LLM-Powered Personalized Parsons Puzzles for Engaging Support While Learning Programming

ACM Conference on Learning @ Scale

🏅 Best Paper Nomination

We presented CodeTailor, a system that leverages a large language model (LLM) to provide personalized help to students while still encouraging cognitive engagement. CodeTailor provides a personalized Parsons puzzle to support struggling students. In a Parsons puzzle, students place mixed-up code blocks in the correct order to solve a problem.

CodeTailor distinguishes itself from existing LLM-based products by providing an active learning opportunity where students are expected to "solve" the puzzle rather than simply acting as passive consumers by "reading" a direct solution.

How novices use LLM-based code generators to solve CS1 coding tasks in a self-paced learning environment

ACM Koli Calling International Conference on Computing Education Research

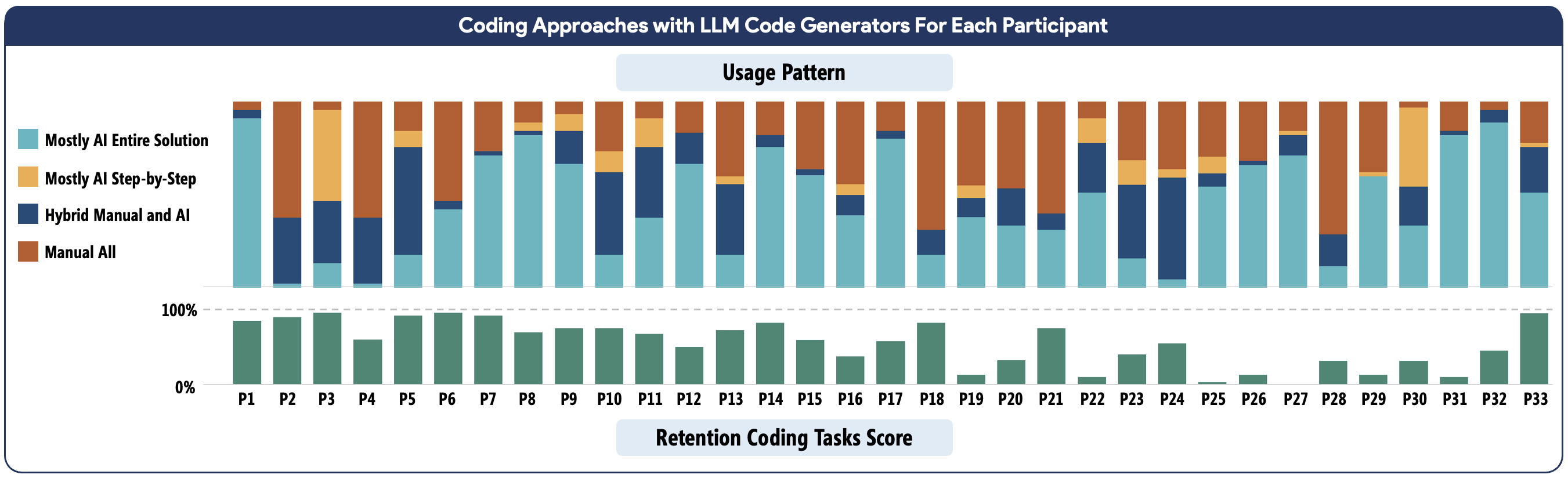

We presented the results of a thematic analysis on a data set from 33 learners as they independently learned Python by working on 45 code-authoring tasks with access to an AI Code Generator based on OpenAI Codex. Our analysis reveals four distinct coding approaches when writing code with an AI code generator: AI Single Prompt; AI Step-by-Step; Hybrid; and Manual coding, where learners wrote the code themselves.

Using Adaptive Parsons Problems to Scaffold Write-code Problems

ACM Conference on International Computing Education Research

In this paper, we explore using Parsons problems to scaffold novice programmers who are struggling while solving write-code problems. Parsons problems, in which students put mixed-up code blocks in order, can be created quickly and already serve thousands of students while other types of programming support methods are expensive to develop or do not scale. We conducted studies in which novices were given equivalent Parsons problems as optional scaffolding while solving write-code problems. We investigated when, why, and how students used the Parsons problems as well as their perceptions of the benefits and challenges.

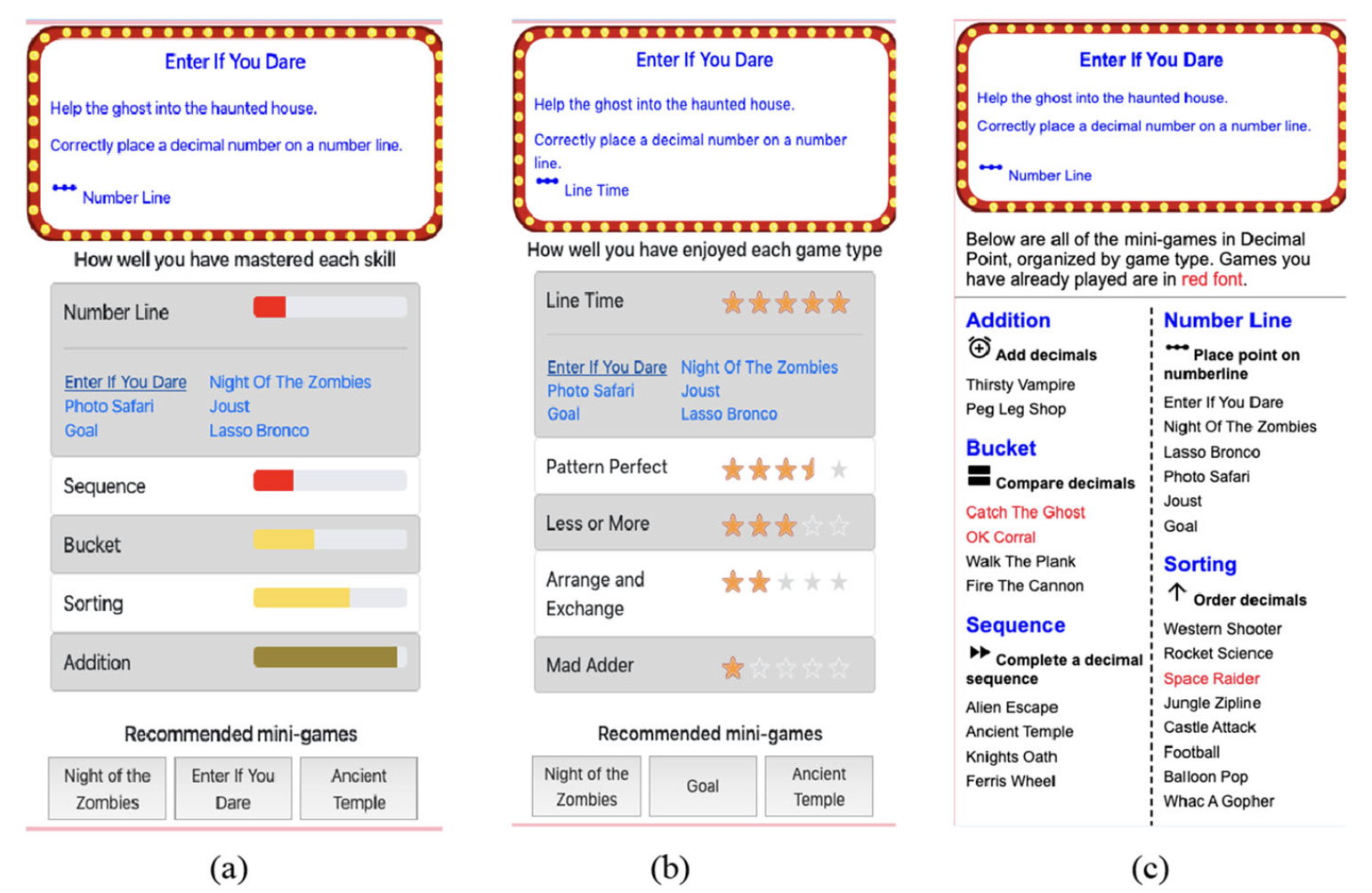

Assessing the effects of open models of learning and enjoyment in a digital learning game

International Journal of Artificial Intelligence in Education

In this math digital learning game, one version encouraged playing and learning through an open learner model, while one encouraged playing for enjoyment through an analogous open enjoyment model. We compared these versions to a control version that is neutral with respect to learning and enjoyment. The learning-oriented group engaged more in re-practicing, while the enjoyment-oriented group demonstrated more exploration of different mini-games. In turn, our analyses have led to preliminary ideas about how to use AI to provide recommendations that are more aligned with students’ dynamic learning and enjoyment states and preferences.